Given that data is by nature messy and uncertain, how can a prediction or decision based on data be prevented from being gullible? In the context of loan applications, to take an extreme example, such gullible decisions may even cause societal harm. It has recently become apparent that modern deep learning systems, which are currently employed to make high-stakes decisions, can be sensitive to imperceptibly small corruptions of their sensory data. I believe it is paramount that future data-driven tools which make decisions with broad societal impact are explicitly safeguarded against such data corruption and its potential adverse effects. All practical data is subject to at least one, and often several, forms of data corruption. Two distinct types of data corruption are perhaps most common: (i) stochastic corruption due to limited data or measurement noise, and (ii) adversarial corruption caused by bad actors. I believe that distributional robustness is a promising framework to safeguard decisions against both types of corruption.

Robust Optimization

A core issue in decision-making is dealing with uncertainty. The loss $L(z, \xi)$ associated with a decision $z$ most often depends on circumstances $\xi$ beyond our control. A naive approach is to ignore uncertainty altogether and assume that $\xi$ takes its most common or average value. Several competing, more advanced perspectives on uncertainty can be considered instead.

Adversarial: The adversarial perspective assumes only that the uncertain parameter $\xi$ belongs to a set $\Xi$. Its value is otherwise unknown, which directly motivates the worst-case formulation

\begin{equation} \min_{z\in Z} \max_{\xi\in\Xi} ~ L(z, \xi). \end{equation}

The adversarial perspective thus favors decisions which are prepared for the worst scenario within the support set $\Xi$. For a large class of loss functions $L$ and sets $\Xi$, the worst-case formulation is computationally tractable. The adversarial perspective is widely used as it handles uncertainty in a tractable fashion with only the set $\Xi$ as primitive. A fair criticism, though, is that adversarial decisions may be pessimistic and fail to do well when the worst-case scenario does not materialize.
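When both $Z$ and $\Xi$ are finite, the worst-case formulation can be solved by plain enumeration. The minimal Python sketch below uses a made-up loss table with three hypothetical decisions (`a`, `b`, `c`) and three hypothetical scenarios (`low`, `mid`, `high`); it only illustrates the min-max structure and is not a tractable method for general $L$ and $\Xi$.

```python
# Made-up loss table: loss[z][xi] stands in for L(z, xi), with three
# hypothetical decisions z and a finite scenario set Xi = {low, mid, high}.
loss = {
    "a": {"low": 1.0, "mid": 2.0, "high": 9.0},
    "b": {"low": 3.0, "mid": 3.5, "high": 4.0},
    "c": {"low": 0.5, "mid": 4.0, "high": 6.0},
}


def robust_decision(loss):
    """Solve min_z max_xi L(z, xi) by enumerating finite Z and Xi."""
    # Inner maximization: worst-case loss of every decision over all scenarios.
    worst = {z: max(row.values()) for z, row in loss.items()}
    # Outer minimization: decision with the smallest worst-case loss.
    z_star = min(worst, key=worst.get)
    return z_star, worst[z_star]


z_star, value = robust_decision(loss)
print(z_star, value)  # b 4.0
```

Decision `b` is the best choice only in the `high` scenario, yet it is the robust decision; this is precisely the pessimism the criticism above refers to.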

Stochastic: Often there is no real reason to believe that the uncertain parameter $\xi$ is chosen with ill will, so taking an adversarial perspective is not always justified. Assuming that $\xi$ follows a distribution $P$ can be more reasonable and in fact has a longer history in the literature. When decisions need to be made repeatedly over time, we may instead consider the stochastic formulation

\begin{equation} \min_{z\in Z} ~\mathbf{E}_P \left[L(z, \xi)\right]. \end{equation}

The stochastic perspective is typically more computationally demanding. Even worse, the optimal decision can be extremely sensitive to the distribution $P$, which in practice must be estimated from noisy historical data.
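The sensitivity to $P$ can be illustrated on a small made-up loss table. In the sketch below, `p_hat_1` and `p_hat_2` are hypothetical stand-ins for two estimates of $P$ obtained from different noisy samples; for a finite scenario set the expectation $\mathbf{E}_P[L(z,\xi)]$ is simply a weighted sum.

```python
# Made-up loss table: loss[z][xi] stands in for L(z, xi).
loss = {
    "a": {"low": 1.0, "mid": 2.0, "high": 9.0},
    "b": {"low": 3.0, "mid": 3.5, "high": 4.0},
    "c": {"low": 0.5, "mid": 4.0, "high": 6.0},
}


def stochastic_decision(loss, p):
    """Solve min_z E_P[L(z, xi)] for a discrete distribution p over scenarios."""
    expected = {z: sum(p[xi] * l for xi, l in row.items()) for z, row in loss.items()}
    z_star = min(expected, key=expected.get)
    return z_star, expected[z_star]


# Two hypothetical estimates of P that differ by 0.2 of probability mass.
p_hat_1 = {"low": 0.5, "mid": 0.3, "high": 0.2}
p_hat_2 = {"low": 0.3, "mid": 0.5, "high": 0.2}

print(stochastic_decision(loss, p_hat_1)[0])  # c
print(stochastic_decision(loss, p_hat_2)[0])  # a
```

Shifting only 0.2 of probability mass from `low` to `mid` changes the optimal decision from `c` to `a`.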

Ambiguous: The nature of the uncertain parameter is very different in the adversarial and stochastic perspectives. Nevertheless, both perspectives can be unified by considering instead the distributionally robust formulation

\begin{equation} \min_{z\in Z} \max_{P\in \mathcal P}~\mathbf{E}_P \left[L(z, \xi)\right] \end{equation}

which assumes that the distribution of the uncertain parameter is itself ambiguous. An ambiguity set consisting of a singleton $\mathcal P= \{ P \}$ recovers the stochastic perspective. An ambiguity set $\mathcal P = \{ P : {\rm{supp}}(P)\subseteq \Xi\}$ consisting of all distributions supported on $\Xi$ recovers the worst-case formulation: the expected loss under any such distribution cannot exceed $\max_{\xi\in\Xi} L(z,\xi)$, and a point mass at a maximizing scenario attains this value. The ambiguous perspective thus allows us to study the adversarial and stochastic perspectives simultaneously.
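For a finite ambiguity set the unification can be checked numerically. The minimal sketch below, again with a made-up loss table, takes $\mathcal P$ to be the convex hull of a finite list of candidate distributions; because the expected loss is linear in $P$, the inner maximum is attained at one of the listed distributions. A singleton list reproduces the stochastic decision, and the list of point masses on all scenarios reproduces the worst-case decision. The names `p_nominal` and `p_alternative` are hypothetical.

```python
# Made-up loss table: loss[z][xi] stands in for L(z, xi).
loss = {
    "a": {"low": 1.0, "mid": 2.0, "high": 9.0},
    "b": {"low": 3.0, "mid": 3.5, "high": 4.0},
    "c": {"low": 0.5, "mid": 4.0, "high": 6.0},
}
SCENARIOS = ["low", "mid", "high"]


def expected_loss(row, p):
    """E_P[L(z, xi)] for one decision's loss row and a discrete distribution p."""
    return sum(p[xi] * row[xi] for xi in p)


def dro_decision(loss, ambiguity_set):
    """Solve min_z max_{P in conv(ambiguity_set)} E_P[L(z, xi)].

    The expectation is linear in P, so its maximum over the convex hull of
    finitely many distributions is attained at one of those distributions.
    """
    worst = {
        z: max(expected_loss(row, p) for p in ambiguity_set)
        for z, row in loss.items()
    }
    z_star = min(worst, key=worst.get)
    return z_star, worst[z_star]


p_nominal = {"low": 0.5, "mid": 0.3, "high": 0.2}
p_alternative = {"low": 0.3, "mid": 0.5, "high": 0.2}
# Point masses on every scenario: their convex hull is all distributions on Xi.
diracs = [{s: float(s == t) for s in SCENARIOS} for t in SCENARIOS]

print(dro_decision(loss, [p_nominal])[0])                  # c (stochastic)
print(dro_decision(loss, diracs))                          # ('b', 4.0) (worst case)
print(dro_decision(loss, [p_nominal, p_alternative])[0])   # a
```

With the two-element list `[p_nominal, p_alternative]` the sketch hedges between the two estimates and selects `a`, a decision that neither the purely stochastic nor the purely adversarial formulation picks here.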

Distributionally robust decision-making is a natural fit for applications such as optimal power flow in electricity networks facing uncertain demand, and load alleviation of wind turbine blades experiencing turbulence.

Related publications

- Roald, L., F. Oldewurtel, B.P.G. Van Parys, and G. Anderson (2015). “Security constrained optimal power flow with distributionally robust chance constraints”. Technical Report.

- Van Parys, B.P.G., B.-F. Ng, P.J. Goulart, and R. Palacios (2014). “Optimal control for load alleviation in wind turbines”. In: 32nd ASME Wind Energy Symposium. National Harbor, Maryland, USA.

Prescriptive Analytics

Operations research has had tremendous practical impact by combining predictive models with powerful optimization tools. Prescriptive problems with covariate information

\begin{equation} z(x) := \arg\min_{z\in Z} ~ \mathbf{E}_{M}\left[L(z, Y) | X= x\right] \end{equation}

describe many modern decision problems and play a central role in my research.

Example (Routing): Your travel time $L(z, Y)$ from the office back home depends not only on the chosen route $z$ but also on the traffic $Y$ along the way. Before choosing the route home, contextual information $X= x$ can be observed; we may, for instance, have noticed that it is snowing. As the trip home needs to be made repeatedly, minimizing the expected travel time conditioned on the context is the way to go.

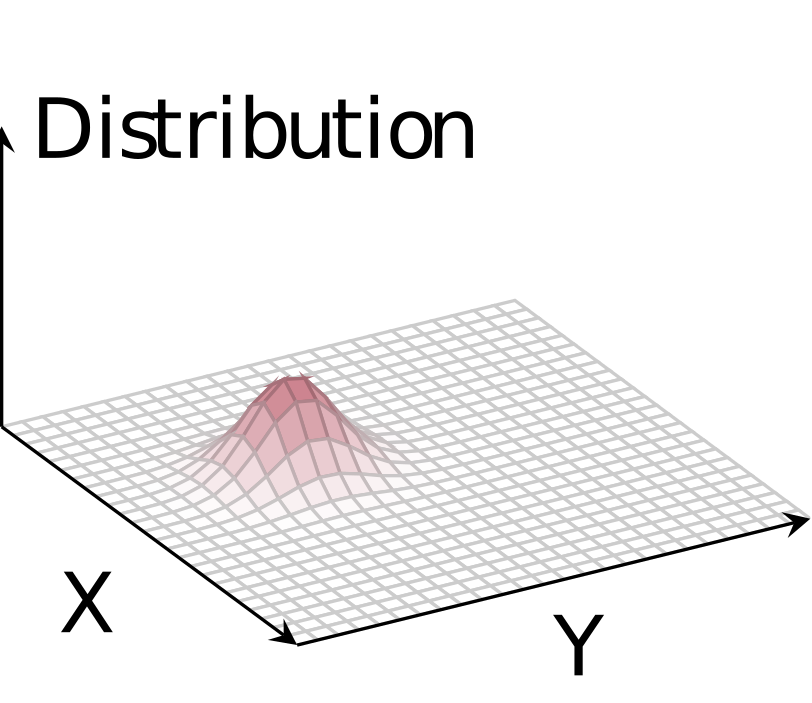

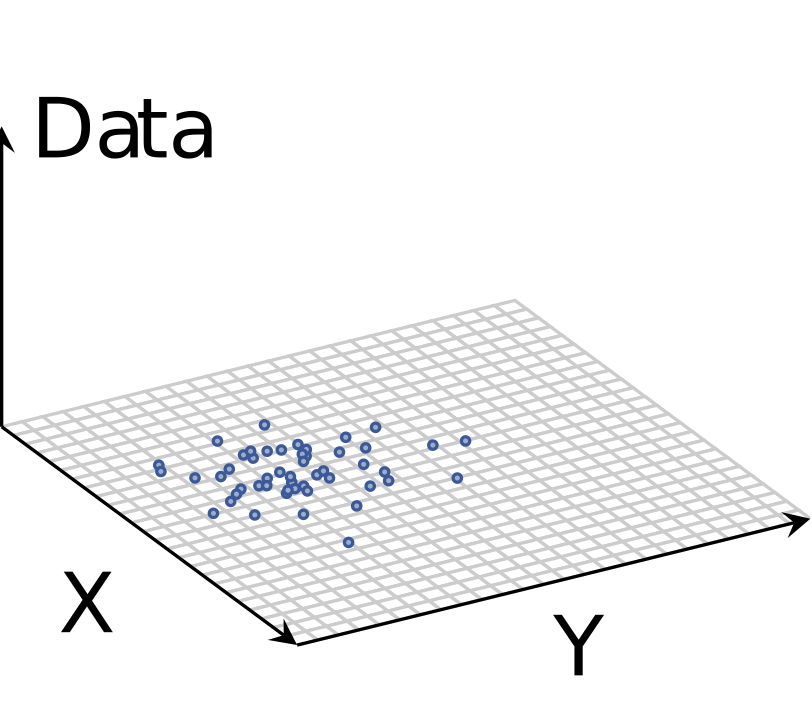
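For a discrete model $M$, the prescription $z(x)$ amounts to a conditional weighted average per route followed by a minimum. The sketch below assumes a hypothetical model with two weather conditions, two traffic levels and two routes (`highway`, `backroads`); all probabilities and travel times are invented for illustration.

```python
# Hypothetical model M: conditional distribution of traffic Y given weather X = x.
MODEL = {
    "clear": {"light": 0.7, "heavy": 0.3},
    "snow": {"light": 0.2, "heavy": 0.8},
}
# Hypothetical travel times in minutes, L(z, y), for each route z and traffic y.
TRAVEL_TIME = {
    "highway": {"light": 20.0, "heavy": 50.0},
    "backroads": {"light": 30.0, "heavy": 35.0},
}


def prescribe(x):
    """Return z(x) = argmin_z E_M[L(z, Y) | X = x] under the discrete model."""
    cond = MODEL[x]
    expected = {
        z: sum(cond[y] * t for y, t in times.items())
        for z, times in TRAVEL_TIME.items()
    }
    return min(expected, key=expected.get)


print(prescribe("clear"))  # highway
print(prescribe("snow"))   # backroads
```

With these numbers the highway is preferred in clear weather (29 versus 31.5 expected minutes) and the back roads when it snows (34 versus 44).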

The decision $z(x)$ is, however, based on a distributional model $M$ describing the stochastic relationship between the uncertain parameter $Y$ and the covariates $X$. Distributional models are never directly observed in practice. Data, on the other hand, is becoming ever easier to obtain, store and process. Methods which leverage such data to make more informed decisions therefore offer a lot of opportunity. Making decisions directly based on historical data instead of distributional models is a core topic in my research.
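One simple way to approximate the conditional expectation in $z(x)$ from data alone is to average the observed losses over the $k$ historical samples whose covariates are closest to $x$. The sketch below uses this $k$-nearest-neighbors weighting, which is one of several possible choices and not necessarily the approach taken in the work described here; the snowfall covariate, the recorded travel times and the function name `knn_prescription` are hypothetical.

```python
# Hypothetical history: (snowfall in cm, observed travel time per route in minutes).
HISTORY = [
    (0.0, {"highway": 21.0, "backroads": 31.0}),
    (0.5, {"highway": 24.0, "backroads": 30.0}),
    (1.0, {"highway": 26.0, "backroads": 32.0}),
    (8.0, {"highway": 48.0, "backroads": 36.0}),
    (10.0, {"highway": 55.0, "backroads": 34.0}),
    (12.0, {"highway": 47.0, "backroads": 38.0}),
]


def knn_prescription(x, history, k=3):
    """Approximate argmin_z E[L(z, Y) | X = x] with a k-nearest-neighbors average.

    No distributional model is used: the conditional expectation is replaced
    by the sample average of the losses observed at the k closest covariates.
    """
    neighbors = sorted(history, key=lambda sample: abs(sample[0] - x))[:k]
    routes = neighbors[0][1].keys()
    average = {
        z: sum(times[z] for _, times in neighbors) / len(neighbors) for z in routes
    }
    return min(average, key=average.get)


print(knn_prescription(0.2, HISTORY))  # highway
print(knn_prescription(9.0, HISTORY))  # backroads
```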

Making decisions based directly on data instead of models does, however, require some care. Predictions as well as prescriptions calibrated to one particular data set need not perform well out of sample. From botched election forecasts to fooled neural networks, examples of gullible approaches to data-driven decision-making abound. Safeguarding prescriptions against over-calibration to one particular training data set using robust optimization is an important direction in my research.
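One way to guard a nearest-neighbors prescription against over-calibration is to replace the nominal average over the $k$ neighbors with a worst-case average over all reweightings of those neighbors within total variation distance $\delta$. The total variation ball, the radius `delta`, the data and the function names are assumptions made for this sketch only, not the ambiguity sets used in the related publications. For this particular ball, the worst case simply moves up to $\delta$ probability mass from the lowest-loss samples onto the highest-loss sample.

```python
# Hypothetical history: (snowfall in cm, observed travel time per route in minutes).
HISTORY = [
    (0.0, {"highway": 20.0, "backroads": 33.0}),
    (0.5, {"highway": 21.0, "backroads": 33.0}),
    (1.0, {"highway": 45.0, "backroads": 34.0}),
    (9.0, {"highway": 50.0, "backroads": 36.0}),
    (11.0, {"highway": 54.0, "backroads": 37.0}),
]


def worst_case_tv(losses, weights, delta):
    """Max of sum_i q_i * losses[i] over distributions q on the same samples
    with total variation distance (1/2) * ||q - weights||_1 <= delta."""
    q = list(weights)
    i_max = max(range(len(losses)), key=lambda i: losses[i])
    budget = min(delta, 1.0 - q[i_max])
    # Greedily shift mass from the cheapest samples to the most expensive one.
    for i in sorted(range(len(losses)), key=lambda i: losses[i]):
        if budget <= 0.0:
            break
        if i == i_max:
            continue
        moved = min(q[i], budget)
        q[i] -= moved
        q[i_max] += moved
        budget -= moved
    return sum(qi * li for qi, li in zip(q, losses))


def robust_knn_prescription(x, history, k=3, delta=0.0):
    """k-nearest-neighbors prescription with a worst-case (robust) average."""
    neighbors = sorted(history, key=lambda sample: abs(sample[0] - x))[:k]
    weights = [1.0 / len(neighbors)] * len(neighbors)
    routes = neighbors[0][1].keys()
    worst = {
        z: worst_case_tv([times[z] for _, times in neighbors], weights, delta)
        for z in routes
    }
    return min(worst, key=worst.get)


print(robust_knn_prescription(0.2, HISTORY, delta=0.0))  # highway
print(robust_knn_prescription(0.2, HISTORY, delta=0.3))  # backroads
```

With `delta=0.0` the sketch recovers the nominal nearest-neighbors choice; with `delta=0.3` the single slow highway trip among the three neighbors receives more weight and the prescription switches to the back roads.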

Related publications

- Van Parys, B.P.G. and M.A. Bennouna (2022). “Robust Two-Stage Optimization with Covariate Data”. Working paper.

- Bertsimas, D. and B.P.G. Van Parys (2022). “Bootstrap robust prescriptive analytics”. In: Mathematical Programming 195, pp. 39–78.

Tractability

Identifying sets of distributions $\mathcal P$ and loss functions $L$ which yield tractable distributionally robust formulations has been a core topic in my research. The main focus has been on sets of distributions described through moment conditions such as the mean and variance. Under rather mild conditions, the resulting distributionally robust formulations can be reduced to semidefinite optimization problems. Sets defined through moments typically include many pathological members, which can render the associated robust formulations rather pessimistic. A priori shape information, such as unimodality and monotonicity of the common generating distribution, can also be incorporated to mitigate the resulting pessimism.

Recently, Wasserstein or optimal transport based ambiguity sets have received a lot of attention, as they have nice statistical properties and admit tractable reformulations. Nevertheless, Wasserstein formulations can become rather computationally demanding when working with a large number of historical data points. In recent work I have advanced mean robust ambiguity formulations, which are best thought of as interpolating between an adversarial robust formulation and a Wasserstein distributionally robust formulation. Such mean robust formulations enjoy a reduced computational burden compared to a Wasserstein distributionally robust formulation, as the number of data points is reduced via an a priori clustering procedure.
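The effect of shape information on pessimism can already be seen in the simplest univariate case. Chebyshev's inequality gives the worst-case probability $\mathbf P(|\xi-\mu|\geq k)$ over all distributions with mean $\mu$ and standard deviation $\sigma$, while Gauss's inequality bounds $\mathbf P(|\xi-m|>k)$ over unimodal distributions with mode $m$ and $\mathbf E[(\xi-m)^2]=\tau^2$. The minimal sketch below merely evaluates these two classical closed-form bounds; it does not implement the semidefinite reformulations discussed above.

```python
import math


def chebyshev_bound(sigma, k):
    """Worst-case P(|X - mu| >= k) over all distributions with std sigma (k > 0)."""
    return min(1.0, sigma**2 / k**2)


def gauss_bound(tau, k):
    """Gauss's inequality: bound on P(|X - m| > k) for unimodal X with mode m
    and E[(X - m)^2] = tau^2 (k >= 0)."""
    if k >= 2.0 * tau / math.sqrt(3.0):
        return 4.0 * tau**2 / (9.0 * k**2)
    return 1.0 - k / (math.sqrt(3.0) * tau)


# Symmetric unimodal case: mode equals mean, so tau = sigma = 1.
for k in (1.5, 2.0, 3.0):
    print(k, chebyshev_bound(1.0, k), gauss_bound(1.0, k))
```

When the mode coincides with the mean, $\tau = \sigma$, and for $k \geq 2\tau/\sqrt 3$ the additional unimodality information shrinks the worst-case tail probability by a factor $4/9$.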

Related publications

- Wang, I., C. Becker, B.P.G. Van Parys, and B. Stellato (2022). “Mean Robust Optimization”. In: Operations Research. Major Revision. Won the 2022 INFORMS Computing Society Student Paper Prize.

- Van Parys, B.P.G., P.J. Goulart, and M. Morari (2019). “Distributionally robust expectation inequalities for structured distributions”. In: Mathematical Programming 173.1-2, pp. 251–280.

- Van Parys, B.P.G., P.J. Goulart, and D. Kuhn (2016). “Generalized Gauss inequalities via semidefinite programming”. In: Mathematical Programming 156.1-2, pp. 271–302. Finalist, George Nicholson Student Paper Competition.

- Van Parys, B.P.G., D. Kuhn, P.J. Goulart, and M. Morari (2016). “Distributionally robust control of constrained stochastic systems”. In: IEEE Transactions on Automatic Control 61.2, pp. 430–442.

- Van Parys, B.P.G., P.J. Goulart, and P. Embrechts (2016). “Frechet inequalities via convex optimization”. Technical Report.